Honestly, on this blog you won't find the information word for word, but you will find summarized information about computing, among other topics.

Monday, November 28, 2022

Thursday, November 24, 2022

Monday, November 21, 2022

Artificial Intelligence (AI)

Artificial intelligence leverages computers and machines to mimic the problem-solving and decision-making capabilities of the human mind.

What is artificial intelligence?

Although a number of definitions of artificial intelligence (AI) have emerged over the past few decades, John McCarthy offers the following one in a paper published in 2004: "It is the science and engineering of making intelligent machines, especially intelligent computer programs. It is related to the similar task of using computers to understand human intelligence, but AI need not be limited to methods that are biologically observable."

However, decades before this definition, the conversation about artificial intelligence began with Alan Turing's landmark paper, "Computing Machinery and Intelligence," published in 1950. In this paper, Turing, often referred to as the "father of computer science," asks the question, "Can a machine think?" From there, he proposes a test, now known as the "Turing test," in which a human interrogator tries to distinguish between a computer's text responses and those of a human. While this test has come under much scrutiny since its publication, it remains an important part of the history of AI, as well as an ongoing concept within philosophy, as it draws on ideas about linguistics.

In its simplest form, artificial intelligence is a field that combines computer science and robust data sets to enable problem solving. It also encompasses the subfields of machine learning and deep learning, which are often mentioned in conjunction with artificial intelligence. These disciplines are composed of AI algorithms that seek to create expert systems that make predictions or classifications based on input data.

Today, there is still a lot of hype surrounding the development of AI, which is expected of any new emerging technology in the market. As noted in Gartner's Hype Cycle, product innovations such as autonomous vehicles and personal assistants follow "a typical progression of innovation, from over-enthusiasm, to a period of disillusionment to an eventual realization of the innovation's relevance and role in a market or domain." As Lex Fridman points out in his 2019 MIT lecture, we are at the peak of over-hyped expectations, approaching disillusionment.

Deep learning vs. machine learning

Since deep learning and machine learning tend to be used interchangeably, it is worth noting their differences. As mentioned above, both are subfields of artificial intelligence, and deep learning is actually a subfield of machine learning.

Deep learning is built on neural networks. "Deep" refers to the number of layers: a neural network with more than three layers, counting the input and output layers, can be considered a deep learning algorithm. This is usually represented by the following diagram:

The difference between deep learning and machine learning is how each algorithm learns. Deep learning automates much of the feature extraction phase of the process, which eliminates some of the manual human intervention required and allows the use of larger data sets. Deep learning could be considered "scalable machine learning," as Lex Fridman pointed out in the same MIT lecture mentioned above. Traditional, or "non-deep," machine learning relies more on human intervention to learn: human experts determine the hierarchy of features needed to understand the differences between data inputs, which generally requires more structured data to learn from.

"Deep" machine learning can use labeled data sets, also known as supervised learning, to inform its algorithm, but it does not necessarily require a labeled data set. It can ingest unstructured data in its raw form (such as text or images) and automatically determine the hierarchy of features that distinguish different categories of data. Unlike classical machine learning, it does not require human intervention to process data, which allows it to scale in more interesting ways.
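To make the layer count concrete, here is a minimal forward-pass sketch in plain Python of a network with an input layer, two hidden layers, and an output layer (four layers in total, so "deep" by the definition above). The layer sizes, the random weights, and the choice of ReLU and sigmoid activations are illustrative assumptions; a real deep learning model learns its weights through training, which this sketch does not show.

```python
import math
import random

def relu(x: float) -> float:
    # Rectified linear unit: negative inputs become 0
    return max(0.0, x)

def sigmoid(x: float) -> float:
    # Squashes any real number into the range (0, 1)
    return 1.0 / (1.0 + math.exp(-x))

def dense_layer(inputs, weights, biases, activation):
    # weights[j][i] connects input i to neuron j of this layer
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

def init_layer(n_in, n_out, rng):
    # Random (untrained) weights and zero biases, for illustration only
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases

# Assumed sizes: input (3), two hidden layers (4 and 4), output (1) -> four layers
LAYER_SIZES = [3, 4, 4, 1]

def build_network(sizes, seed=0):
    rng = random.Random(seed)
    # One weight matrix per connection between consecutive layers
    return [init_layer(n_in, n_out, rng) for n_in, n_out in zip(sizes, sizes[1:])]

def forward(network, x):
    activations = x
    for i, (weights, biases) in enumerate(network):
        is_output = i == len(network) - 1
        # Hidden layers use ReLU; the output layer uses sigmoid
        activations = dense_layer(activations, weights, biases,
                                  sigmoid if is_output else relu)
    return activations

net = build_network(LAYER_SIZES)
print(forward(net, [0.5, -1.2, 3.0]))
```

Calling `forward(net, [0.5, -1.2, 3.0])` returns a one-element list holding a value between 0 and 1; because the weights are random and untrained, that value carries no meaning yet. Training would adjust the weights so the hidden layers extract useful features on their own, which is the automation of feature extraction described above.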

Artificial intelligence applications

Today there are numerous practical applications of AI systems. Some of the most common examples are:

Speech recognition: also called automatic speech recognition (ASR), computer speech recognition, or speech-to-text, this is a capability that uses natural language processing (NLP) to convert human speech into written text. Many mobile devices incorporate speech recognition into their systems to perform voice searches (e.g., Siri) or to provide more accessibility for text messaging.

Customer service: online chatbots are replacing human agents along the customer journey. They answer frequently asked questions on different topics (such as shipping), provide personalized advice, cross-sell products, or suggest sizes for users, changing the way businesses interact with customers on websites and social media platforms. Examples include messaging bots on e-commerce sites with virtual agents, messaging apps (such as Slack and Facebook Messenger), and tasks usually performed by virtual assistants and voice assistants.

Computer vision: this AI technology enables computers and systems to obtain meaningful information from digital images, videos, and other visual inputs, and to act on it. This ability to provide recommendations distinguishes it from image recognition tasks. Driven by convolutional neural networks, computer vision can be applied to photo tagging in social networks, radiological imaging in healthcare, and autonomous vehicles in the automotive industry.

Recommendation engines: using data from past consumer behavior, AI algorithms can help uncover data trends to develop more effective cross-selling strategies. This is used to enable online retailers to make additional relevant recommendations to customers during the buying process.

Automated stock trading: designed to optimize stock portfolios, AI-powered high-frequency trading platforms make thousands or even millions of trades per day without human intervention.
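As a rough illustration of how past purchases can drive cross-selling, the sketch below counts which products appear together in the same orders and recommends the most frequent companions ("customers who bought X also bought Y"). The order data and product names are hypothetical, and co-occurrence counting is only one simple technique; production recommendation engines typically use more sophisticated methods such as collaborative filtering or learned models.

```python
from collections import Counter
from itertools import permutations

def build_co_occurrence(orders):
    """Count how often each ordered pair of products appears in the same order."""
    co = {}
    for order in orders:
        # set() so a product repeated within one order is counted once
        for a, b in permutations(set(order), 2):
            co.setdefault(a, Counter())[b] += 1
    return co

def recommend(co, product, k=2):
    """Return up to k products most often bought together with `product`."""
    if product not in co:
        return []
    # Highest count first; ties broken alphabetically so results are deterministic
    ranked = sorted(co[product].items(), key=lambda item: (-item[1], item[0]))
    return [name for name, _ in ranked[:k]]

# Hypothetical purchase history
orders = [
    ["laptop", "mouse", "laptop bag"],
    ["laptop", "mouse"],
    ["phone", "phone case"],
    ["laptop", "usb hub"],
]
co = build_co_occurrence(orders)
print(recommend(co, "laptop"))
```

Here `recommend(co, "laptop")` returns `["mouse", "laptop bag"]`: the mouse appears with the laptop in two orders, while the laptop bag and the USB hub appear once each, and that tie is broken alphabetically. A retailer could show these items as additional suggestions during checkout.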

Sunday, November 20, 2022

Timeline of operating systems

At first, computers had no operating systems. The term "operating system" appeared in the early 1950s, associated with EXEC I, but it was not until EXEC II, created by UNIVAC, that operating systems became widely known.

2nd Generation

1960 - 1965

At the beginning of this second generation, UNIVAC builds EXEC II, an improved version of its first-generation EXEC operating system. Years later, EXEC 8 appears.

Batch systems appear in this generation, with the aim of making computers easier for users to operate; they are characterized by batch processing.

The first integrated circuits also appeared.

3rd Generation

1965 - 1971

Most operating systems are single-user.

Multiprocessors appear.

IBM develops OS/360 in 1965.

MULTICS appears; it would be the precursor of UNIX, which was developed between 1969 and 1970.

IBM creates new operating systems: CP/CMS and VM/CMS.

4th Generation

1971 - 1981

Operating systems are multitasking and multiuser, with text-mode interfaces.

UNIX, which is multitasking and multiuser, is rewritten in C.

CP/M is created.

BSD UNIX is created at the University of California, Berkeley; this operating system arose when AT&T withdrew the UNIX license.

VMS or OpenVMS is launched and would last well into the next generation.

5th Generation

1981 - 2000

The IBM PC was released with the PC-DOS operating system.

MS-DOS, developed by Microsoft, appears with a text-mode interface; Windows was later built on top of it.

The Novell NetWare network operating system appears.

The GNU project is created by Richard Stallman.

Linux is born, created by student Linus Torvalds.

The Linux kernel joins the GNU project and GNU/Linux is born.

Steve Jobs takes over the Macintosh project.

Mac System Software, the first edition of the Mac OS operating system, owned by Apple, is released.

GNU/Linux

1989 - 2008

1989: Richard Stallman writes the first version of the GNU GPL license.

1991: The Linux kernel is publicly announced on August 25 by the then 21-year-old Finnish student Linus Benedict Torvalds.

1992: The first Linux distributions are created.

1994: In March of this year, Torvalds considers all components of the Linux kernel to be fully mature and releases Linux version 1.0.

1997: Several proprietary programs for Linux are released on the market, such as the Adabas D database and the Netscape browser.

1998: Major computer companies such as IBM, Compaq and Oracle announce support for Linux.

1999: The Linux kernel 2.2 series is released in January, with improved networking code and SMP support.

2001: In January, the 2.4 series of the Linux kernel is released. The Linux kernel now supports up to 64 GB of RAM, 64-bit systems, and USB devices.

2008: The Free Software Foundation Latin America releases Linux-libre.

Windows

1995 - 2020

In 1998, Windows 98 appears as the successor to Windows 95, which was released in August 1995.

In 2000, Windows ME is released; its name stands for "Millennium Edition".

From 2001 to 2009, Windows XP was the most widely used operating system.

In January 2007, Windows Vista was released. It became well known, though not for its performance, and drew countless criticisms from users.

In 2009, Windows 7 was released and became one of the most popular operating systems, helped by the fact that its predecessor had not been up to par.

In 2012, Windows 8 was released. It also received harsh criticism because it had removed the Start menu; Microsoft acknowledged the mistake and promised to bring it back in the next version.

In July 2015, Windows 10 was released.

Saturday, November 19, 2022

The history of Windows

Originally, Microsoft was called "Traf-O-Data" in 1972, then "Micro-soft" in November 1975 and finally on November 26, 1976 it received the name "Microsoft".

Microsoft entered the market in August 1981 by releasing version 1.0 of Microsoft DOS (MS-DOS), a 16-bit command-line operating system.

The first version of Microsoft Windows (Microsoft Windows 1.0) was released in November 1985. It featured a graphical user interface, inspired by the user interface of Apple computers at the time. Windows 1.0 was not successful with the public and Microsoft Windows 2.0, launched on December 9, 1987, did not have better luck.

It was on May 22, 1990, that Microsoft Windows became a success, with Windows 3.0, followed by Windows 3.1 in 1992 and finally Windows for Workgroups, later called Windows 3.11, which included networking capabilities. Windows 3.1 cannot be considered a fully standalone operating system, since it was only a graphical user interface running on top of MS-DOS.

On August 24, 1995, Microsoft released the Windows 95 operating system. With Windows 95, Microsoft wanted to move some MS-DOS capabilities into Windows. However, this new version still relied too much on 16-bit DOS and kept the limitations of the FAT16 file system.

After some minor revisions of Windows 95, called Windows 95A OSR1, Windows 95B OSR2, Windows 95B OSR2.1 and Windows 95C OSR2.5, Microsoft released the next version of Windows on June 25, 1998: Windows 98. Windows 98 natively offered capabilities beyond those of MS-DOS, but it was still based on MS-DOS. In addition, Windows 98 managed memory poorly when running multiple applications, which could cause system crashes. In 1999, a second edition of Windows 98 was released, called Windows 98 SE ("Second Edition").

On September 14, 2000, Microsoft released Windows Me (for Millennium Edition), also known as Windows Millennium. Windows Millennium was largely based on Windows 98 (and therefore MS-DOS), although it added additional multimedia and software capabilities. Also, Windows Millennium included a system restore mechanism to revert to a previous state in the event of a system crash.

At the same time as releasing these versions, Microsoft had been selling (since 1993) a full 32-bit operating system (not based on MS-DOS) for professional use, at a time when companies were mainly using mainframe systems. It was called Windows NT (for Windows "New Technology"). Windows NT was neither a new version of Windows 95 nor an improvement on it, but a completely different operating system.

On May 24, 1993, the first version of Windows NT was released. It was called Windows NT 3.1. This was followed by Windows NT 3.5 in September 1994 and Windows NT 3.51 in June 1995. With Windows NT 4.0, released on August 24, 1996, Windows NT became a real success.

In July 1998, Microsoft released Windows NT 4.0 Terminal Server Edition (TSE). This was the first Windows system to allow terminals to connect to a server, i.e. to use thin clients to open a session on the server.

On February 17, 2000, the successor to NT 4.0 was released under the name Windows 2000 (instead of Windows NT 5.0) to highlight the unification of the "NT" and "Windows 9x" lines. Windows 2000 is a full 32-bit system with Windows NT features, an improved task manager, and full support for USB and FireWire peripherals.

Then, on October 25, 2001, Windows XP hit the market. This version merged the two previous families of operating systems.

Thursday, November 17, 2022

1801: In France, Joseph Marie Jacquard invents a loom that uses punched wooden cards to automatically weave fabric designs. Early computers would use similar punch cards.

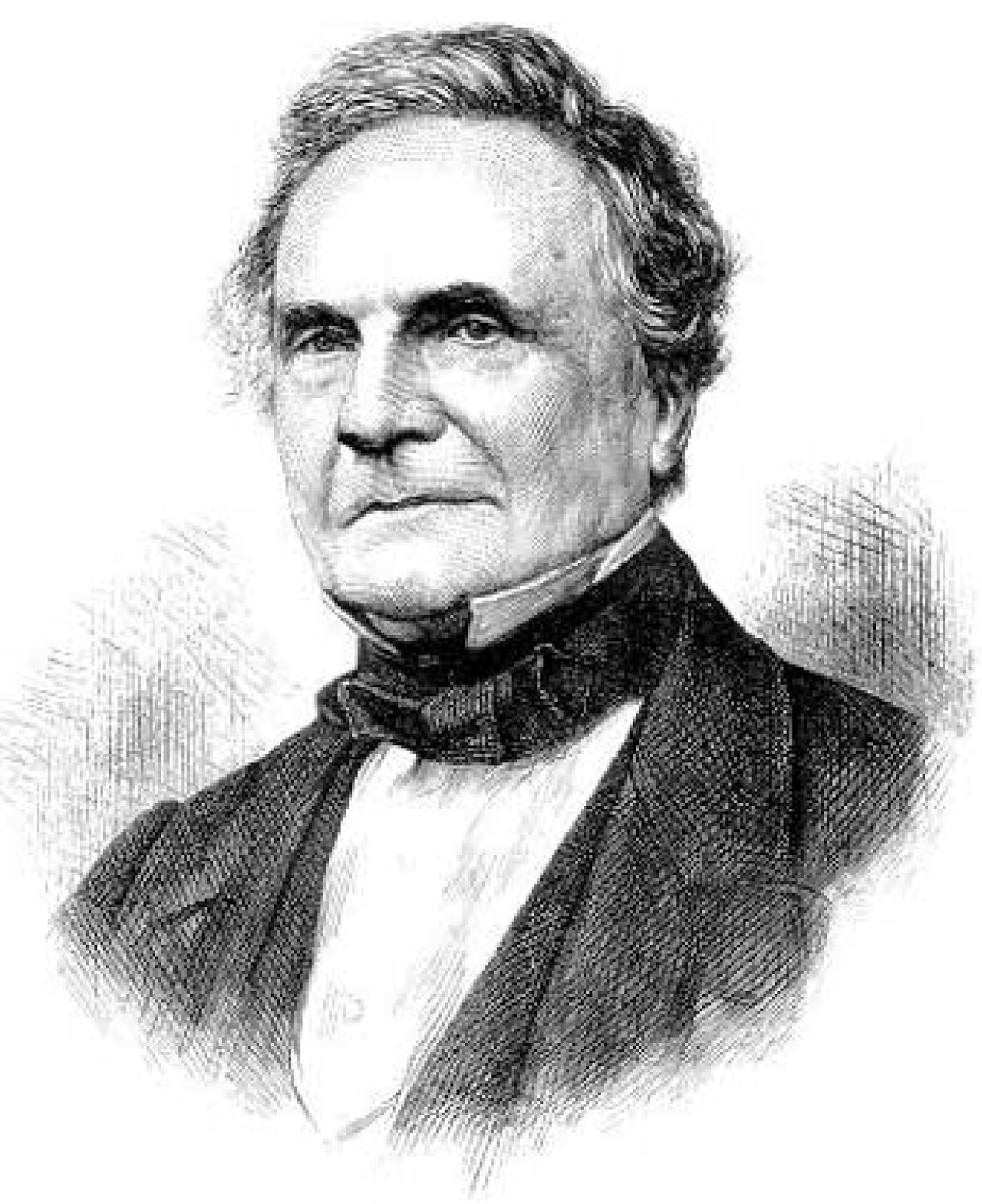

1822: English mathematician Charles Babbage conceives of a steam-powered calculating machine that could compute tables of numbers. The project, financed by the English government, is a failure. More than a century later, however, the world's first computer was built.

1890: Herman Hollerith designs a punch card system to compute the 1890 census, accomplishing the task in just three years and saving the government $5 million. He establishes a company that would eventually become IBM.

1936: Alan Turing presents the notion of a universal machine, later called the Turing machine, capable of computing anything that is computable. The central concept of the modern computer was based on his ideas.

1937: J.V. Atanasoff, a professor of physics and mathematics at Iowa State University, attempts to build the first computer without gears, cams, belts, or shafts.

1939: Hewlett-Packard is founded by David Packard and Bill Hewlett in a garage in Palo Alto, California, according to the Computer History Museum.

1941: Atanasoff and his graduate student Clifford Berry design a computer that can solve 29 equations simultaneously. This marks the first time that a computer can store information in its main memory.

1943-1944: Two University of Pennsylvania professors, John Mauchly and J. Presper Eckert, build the Electronic Numerical Integrator and Computer (ENIAC). Considered the grandfather of digital computers, it fills a room 20 feet by 40 feet and has 18,000 vacuum tubes.

1946: Mauchly and Eckert leave the University of Pennsylvania and receive funding from the Census Bureau to build the UNIVAC, the first commercial computer for business and government applications.

1953: Grace Hopper develops the first computer language, eventually known as COBOL. Thomas Johnson Watson Jr., son of IBM CEO Thomas Johnson Watson Sr., conceives the IBM 701 EDPM to help the United Nations keep track of Korea during the war.

1954: The FORTRAN programming language, an acronym for FORmula TRANslation, is developed by a team of programmers at IBM led by John Backus, according to the University of Michigan.

1958: Jack Kilby and Robert Noyce reveal the integrated circuit, known as the computer chip. Kilby was awarded the Nobel Prize in Physics in 2000 for his work.

1964: Douglas Engelbart demonstrates a prototype of the modern computer, complete with a mouse and a graphical user interface (GUI). This marks the evolution of the computer from a specialized machine for scientists and mathematicians to a technology more accessible to the general public.

1971: Alan Shugart leads a team of IBM engineers who invent the "floppy disk," allowing data to be shared between computers.

1973: Robert Metcalfe, a member of the Xerox research staff, develops Ethernet to connect various computers and other hardware.

1975: The January issue of Popular Electronics magazine features the Altair 8800, described as the "world's first minicomputer kit to compete with commercial models." Two "computer geeks," Paul Allen and Bill Gates, offer to write software for the Altair, using the new BASIC language. On April 4, after the success of this first effort, the two childhood friends form their own software company, Microsoft.

1976: Steve Jobs and Steve Wozniak start Apple Computers on April Fool's Day and release the Apple I, the first computer with a single circuit board, according to Stanford University.

The TRS-80, introduced in 1977, was one of the first machines whose documentation was intended for non-geeks.

1977: Radio Shack's initial production run of the TRS-80 was just 3,000. It was selling like crazy. For the first time, non-geeks could write programs and make a computer do what they wanted.

1977: Jobs and Wozniak incorporate Apple and display the Apple II at the first West Coast Computer Show. It offers color graphics and incorporates an audio cassette drive for storage.

1978: Accountants rejoice with the introduction of VisiCalc, the first computerized spreadsheet program.

1979: Word processing becomes a reality as MicroPro International launches WordStar. "The ultimate change was adding margins and word wrapping," creator Rob Barnaby said in an email to Mike Petrie in 2000. "Additional changes included getting rid of the command mode and adding a print feature. I was the technical brain. I figured out how to do it, and did it, and documented it."

1983: Apple's Lisa is the first personal computer with a GUI. It also features a drop-down menu and icons. It flops but eventually evolves into the Macintosh. The Gavilan SC is the first portable computer with the familiar flip form factor and the first to be marketed as a "laptop."

1985: Microsoft announces Windows, according to the Encyclopedia Britannica. This was the company's response to Apple's graphical user interface. Commodore introduces the Amiga 1000, which features advanced audio and video capabilities.

1985: The first dot-com domain name is registered on March 15, years before the World Wide Web marked the formal start of Internet history. The Symbolics Computer Company, a small Massachusetts computer manufacturer, registers Symbolics.com. More than two years later, only 100 dot coms had been registered.

1986: Compaq brings the Deskpro 386 to market. Its 32-bit architecture provides speed comparable to that of mainframes.

1990: Tim Berners-Lee, a researcher at CERN, the high-energy physics laboratory in Geneva, develops Hypertext Markup Language (HTML), giving birth to the World Wide Web.

1993: The Pentium microprocessor advances the use of graphics and music in PCs.

1994: PCs become gaming machines as "Command & Conquer," "Alone in the Dark 2," "Theme Park," "Magic Carpet," "Descent" and "Little Big Adventure" are among the games to go to market.

1996: Sergey Brin and Larry Page develop the Google search engine at Stanford University.

1997: Microsoft invests $150 million in Apple, which was struggling at the time, ending Apple's court case against Microsoft alleging that Microsoft had copied the "look and feel" of its operating system.

1999: The term Wi-Fi becomes part of computer parlance and users begin to connect to the Internet without wires.

2001: Apple introduces the Mac OS X operating system, which provides protected memory architecture and preemptive multitasking, among other benefits. Not to be outdone, Microsoft rolls out Windows XP, which has a significantly redesigned GUI.

2003: AMD's Athlon 64, the first 64-bit processor for the consumer market, becomes available.

2004: Mozilla's Firefox 1.0 challenges Microsoft's Internet Explorer, the dominant web browser. Facebook, a social networking site, launches.

2005: YouTube, a video-sharing service, is founded. Google acquires Android, a Linux-based operating system for mobile phones.

2006: Apple introduces the MacBook Pro, its first Intel-based dual-core mobile computer, as well as an Intel-based iMac. Nintendo's Wii game console hits the market.

2007: The iPhone brings many computer features to the smartphone.

2009: Microsoft releases Windows 7, which offers the ability to pin apps to the taskbar and advances in handwriting and touch recognition, among other features.

2010: Apple introduces the iPad, changing the way consumers view media and boosting the dormant tablet segment.

2011: Google launches the Chromebook, a laptop running Google Chrome OS.

2012: Facebook gains 1 billion users on October 4.

2015: Apple launches the Apple Watch. Microsoft releases Windows 10.

2016: The first reprogrammable quantum computer was created. "Until now, there hasn't been any quantum computing platform that had the ability to program new algorithms into its system. Typically, each one is designed to attack a particular algorithm," said study lead author Shantanu Debnath, a Quantum Physicist and Optical Engineer at the University of Maryland, College Park.
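The 1936 entry above mentions Turing's universal machine. The underlying idea of a Turing machine (a tape of symbols, a read/write head, and a finite table of rules) can be simulated in a few lines. The sketch below is a minimal Python illustration under stated assumptions: the binary-increment rule table `INCREMENT_RULES` and the helper `run_turing_machine` are hypothetical examples chosen for clarity, not taken from Turing's paper, and the sketch runs one specific machine rather than a universal one.

```python
# Hypothetical rule table: (state, symbol read) -> (symbol to write, move, next state).
# Move is -1 for left and +1 for right; "_" marks a blank cell.
INCREMENT_RULES = {
    ("right", "0"): ("0", +1, "right"),  # scan right to the end of the number
    ("right", "1"): ("1", +1, "right"),
    ("right", "_"): ("_", -1, "carry"),  # past the last digit: turn back
    ("carry", "1"): ("0", -1, "carry"),  # 1 + carry = 0, keep carrying left
    ("carry", "0"): ("1", -1, "halt"),   # 0 + carry = 1, done
    ("carry", "_"): ("1", -1, "halt"),   # ran off the left end: new leading 1
}

def run_turing_machine(tape, rules, state, head=0, halt="halt", max_steps=10_000):
    """Simulate a single-tape Turing machine and return the final tape contents."""
    cells = dict(enumerate(tape))  # sparse tape: position -> symbol
    steps = 0
    while state != halt:
        if steps == max_steps:
            raise RuntimeError("machine did not halt within max_steps")
        symbol = cells.get(head, "_")  # unvisited cells read as blank
        write, move, state = rules[(state, symbol)]
        cells[head] = write
        head += move
        steps += 1
    lo, hi = min(cells), max(cells)
    # Read the visited part of the tape and drop blanks at both ends
    return "".join(cells.get(i, "_") for i in range(lo, hi + 1)).strip("_")

print(run_turing_machine("1011", INCREMENT_RULES, "right"))
```

Running it on `"1011"` (eleven in binary) prints `1100` (twelve): the machine walks to the right end of the number, then turns 1s into 0s while carrying left until it finds a 0 or a blank to turn into a 1. A universal machine would instead read another machine's rule table from its own tape.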

The most lethal viruses